Original Frameworks

These are the lenses I use to see problems that others often miss.

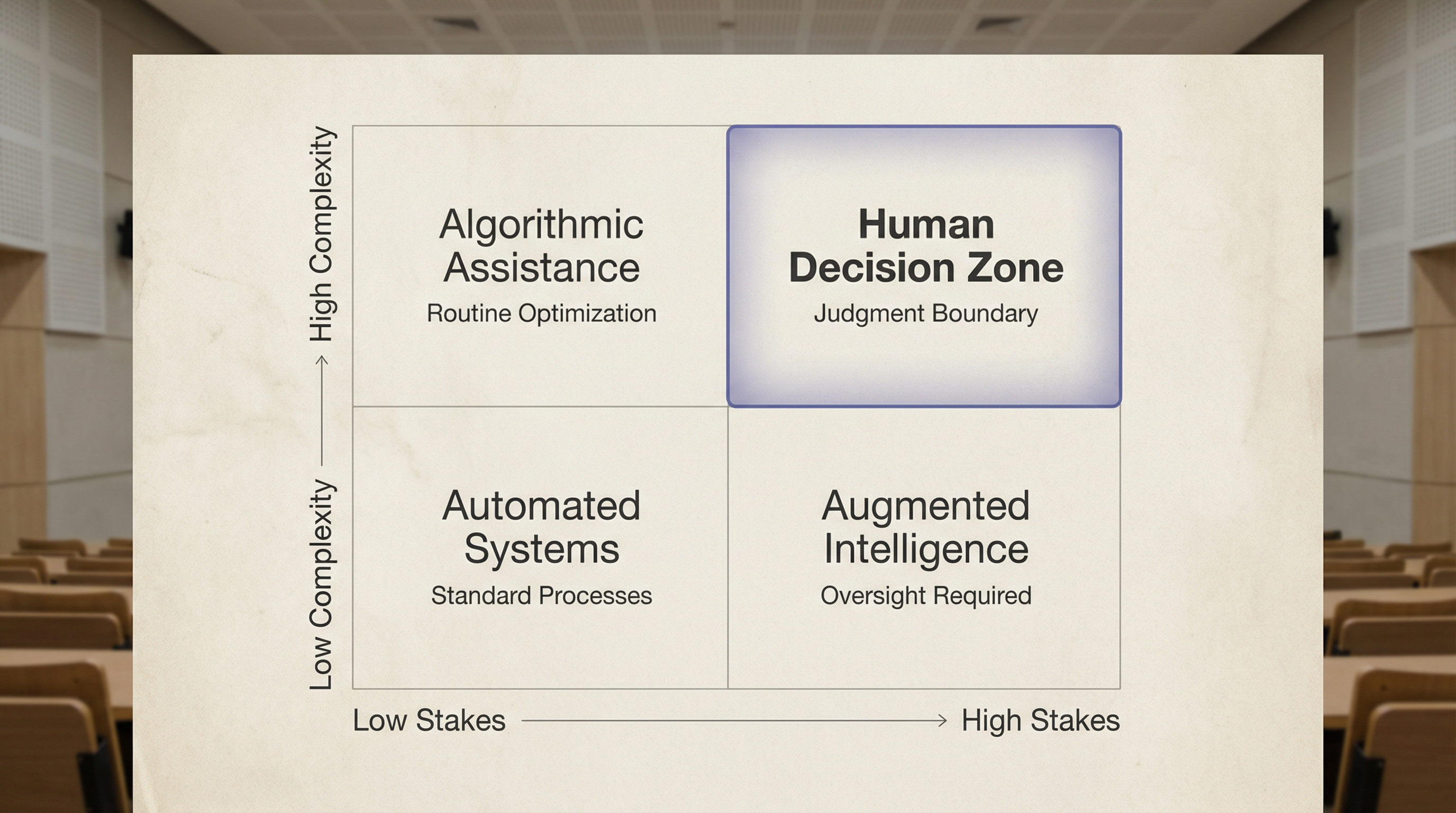

The Judgment Boundary

When to automate vs. when to advise

This model sets the appropriate level of AI involvement by assessing the stakes and complexity of a task. It guards against two common errors: automating high-stakes decisions and underusing AI on low-stakes tasks.
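One way to picture the boundary is as a small lookup over stakes and complexity. The sketch below is illustrative only: the two-level scale, the `Mode` names, and the treatment of low-stakes but complex tasks are assumptions added for the example, not part of the model as stated. The only rules taken from the text are that high-stakes decisions are not automated and low-stakes tasks should make use of AI.

```python
from enum import Enum


class Level(Enum):
    """Hypothetical two-level scale; a real assessment may be finer grained."""
    LOW = "low"
    HIGH = "high"


class Mode(Enum):
    """Hypothetical involvement modes for this sketch."""
    AUTOMATE = "automate"
    AUTOMATE_WITH_REVIEW = "automate, with periodic human review"
    ADVISE = "advise; a human makes the decision"


def recommend_mode(stakes: Level, complexity: Level) -> Mode:
    """Map a task's stakes and complexity to a level of AI involvement."""
    # From the text: high-stakes decisions are never fully automated.
    if stakes is Level.HIGH:
        return Mode.ADVISE
    # Assumption: low-stakes but complex work is automated with spot checks.
    if complexity is Level.HIGH:
        return Mode.AUTOMATE_WITH_REVIEW
    # From the text: low-stakes tasks should not under-use AI.
    return Mode.AUTOMATE
```

For example, `recommend_mode(Level.HIGH, Level.LOW)` returns `Mode.ADVISE`: even a simple task stays with a human when the stakes are high.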

Trust Compounding

Building authority in YMYL (Your Money or Your Life) contexts

In high-stakes environments, trust compounds more slowly than scale, but it runs deeper. You can automate a thousand decisions in a day, but if one fails catastrophically, trust evaporates.

This model outlines the four key principles for building systems where trust accumulates over time.

Transparency

Show the reasoning, not just the output. The "black box" is the enemy of trust.

Calibration

Be honest about the model's confidence. "I'm 60% sure" is more valuable than a false absolute.

Boundaries

Explicitly define limits. "I don't know" is a valid and necessary answer.

Human Override

Ensure a human expert can always intervene. The system serves the human, not the reverse.
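The four principles can be sketched together as a single record that a system returns instead of a bare answer. This is a minimal illustration, not a reference design: `Recommendation`, `recommend`, `override`, the `in_scope` flag, and the `CONFIDENCE_FLOOR` value are all hypothetical names and numbers chosen for the example.

```python
from dataclasses import dataclass, replace
from typing import Optional

# Hypothetical threshold below which the system declines to answer.
CONFIDENCE_FLOOR = 0.5


@dataclass(frozen=True)
class Recommendation:
    answer: Optional[str]                 # None means "I don't know" (Boundaries)
    confidence: float                     # stated honestly, 0.0 to 1.0 (Calibration)
    reasoning: str                        # shown alongside the output (Transparency)
    overridden_by: Optional[str] = None   # set when an expert intervenes (Human Override)


def recommend(answer: str, confidence: float, reasoning: str,
              in_scope: bool) -> Recommendation:
    """Return an answer only when the task is in scope and confidence is adequate."""
    if not in_scope or confidence < CONFIDENCE_FLOOR:
        # Boundaries: decline rather than guess, but still report confidence and reasoning.
        return Recommendation(None, confidence, reasoning)
    return Recommendation(answer, confidence, reasoning)


def override(rec: Recommendation, expert: str, answer: str) -> Recommendation:
    """Replace the answer with the expert's decision and record who made it."""
    # The model's original confidence and reasoning are kept for later audit.
    return replace(rec, answer=answer, overridden_by=expert)
```

A call such as `recommend("approve", 0.6, "matches policy section 4", in_scope=True)` yields an answer with its 60% confidence attached, while the same call with a confidence of 0.3 yields `answer=None`. In both cases `override` lets a named expert set the final answer.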